General purpose GPU programming

The seismic industry has profited significantly from rapid hardware development in other industries. One example is Surface Related Multiple Elimination (SRME): when it was first introduced, it was dismissed as too computationally expensive. Today it is the industry standard for multiple attenuation in 2D and 3D.

Already as an intern at Fugro FSI, I had several nodes with 16 cores and 96 GB of RAM at my disposal for testing purposes. Every year, $5 to $7 billion is invested in new clusters industry-wide. We have quite some computing power in our hands, but there’s a limit, and that limit lies in the design of CPUs.

CPUs are designed to do a variety of tasks. There are so many things we do with our PCs: we finish our PowerPoint presentations while Skyping across the world. This is what CPUs are good at: mostly single, sequential tasks.

But there’s something new coming up. Walking the exhibition floor at the EAGE (2015), I was a bit puzzled to see Nvidia and other companies selling hardware that one might know from computer gaming: graphics cards. Talking to a sales rep, I learned that these are powered by GPUs instead of CPUs and that we might be able to utilize this power in the seismic industry.

However, I later learned that there’s a big catch with GPUs. Core for core, GPUs are slower than CPUs. They’re not as smart, although they evolved alongside the CPU; GPUs simply always had a different purpose. But they’re amazing at one thing: easy calculations, like addition and multiplication. In fact, they can do a lot of them quickly and in parallel. Essentially, they function like a big class of 3rd graders.

Working in parallel on different cores means we have to be smart about dividing our resources. Parallel code stands or falls with good planning. When assigning parallel calculations, we do not want several of them to have to wait for a single other calculation. High-performance computing (HPC) has really improved on this issue, but GPUs take it to an entirely new level.
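To make the planning point concrete, here is a minimal sketch in plain Python (not GPU code) that contrasts two kinds of work. The element-wise sum has no dependencies between outputs, so every pair can be handed to a separate worker. The running sum needs the previous result at every step, so its workers would mostly wait on each other. The function `add_pair` and the sample arrays are made up for illustration, and a thread pool merely stands in for the many cores of a GPU.

```python
from concurrent.futures import ThreadPoolExecutor


def add_pair(pair):
    """Independent task: needs only its own two inputs."""
    a, b = pair
    return a + b


# Hypothetical sample data, for illustration only.
a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]

# Element-wise addition: no output depends on another output, so the work
# can be spread over workers freely. pool.map keeps the input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    elementwise = list(pool.map(add_pair, zip(a, b)))

# Running sum: each step needs the previous result, so in this naive form
# the steps have to be executed one after the other.
running = []
acc = 0.0
for x in a:
    acc += x
    running.append(acc)

print(elementwise)  # [11.0, 22.0, 33.0, 44.0]
print(running)      # [1.0, 3.0, 6.0, 10.0]
```

On a GPU, the first pattern would map naturally onto one thread per element, while the second needs a rethought algorithm before it benefits from parallel hardware.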

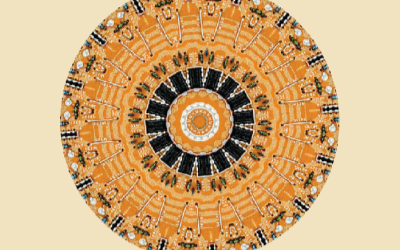

The image shows how CPUs devote a lot of space to control units and cache, whereas the GPU uses most of its architecture for processing. | CC-BY Nvidia

Picking up on the analogy of 3rd graders, GPUs are a tad special to work with. When you tell your class to add three, three times in sequence, you’ll get the right result of 9 if you’re lucky. This is a task badly suited for parallel computing, but sometimes it’s necessary. Taking this into account, we have to think about memory management. That one slow kid in the class may still be working on the first part of the calculation but communicate their result the loudest.

GPUs work in quite a similar way. They only have very special kinds of cache (most are even read-only). So when we want our parallelized tasks to work with intermediate results, we have to explicitly force the GPU to synchronize them. Of course, this synchronization should only be done when absolutely necessary. Additionally, the GPU programming languages have built-in operations that read, modify, and write a variable as one indivisible step, so no other thread can touch its memory in between. These so-called atomic operations save a lot of hassle.
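The classroom example of adding three, three times can be sketched as a toy model. This is a deterministic simulation in plain Python, with no real threads or GPU involved, and both function names are made up for illustration. It shows what goes wrong when every worker reads the shared total before anyone has written back, and what an atomic read-modify-write guarantees instead. In real GPU code this role would be played by something like CUDA's `atomicAdd`.

```python
def unsynchronized_add(n_workers, increment):
    """Toy model of a race: all workers read the total before any writes."""
    total = 0
    # Every worker takes its own snapshot of the same stale value.
    snapshots = [total for _ in range(n_workers)]
    for seen in snapshots:
        # Each write overwrites the previous one instead of building on it.
        total = seen + increment
    return total


def atomic_add(n_workers, increment):
    """Toy model of atomic updates: each read-modify-write is indivisible."""
    total = 0
    for _ in range(n_workers):
        # Read, add, and write happen as one step before the next worker runs.
        total = total + increment
    return total


racy = unsynchronized_add(3, 3)
safe = atomic_add(3, 3)

print(racy)  # 3: two of the three additions were lost
print(safe)  # 9: the result the class was asked for
```

The price of the atomic version is that the updates are effectively serialized, which is why such operations are best reserved for the few places where results really have to be combined.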

Memory management is important when we go into GPU programming. It helps a lot to be fully aware of the hardware we deal with. GPUs work fundamentally differently from CPUs, including in their caching and memory abilities. Learning about the structure of GPUs will enable us to write amazingly fast code.

What we’re talking about here is called General Purpose GPU (GPGPU) programming. There are dedicated languages from Nvidia (CUDA) and the other GPU hardware suppliers, as well as an open alternative called OpenCL that hooks into these. Utilizing those, possibilities are opening up to build highly parallel versions of the most computationally expensive applications we have in seismic processing.

Dedicated GPU cards and even clusters are being sold, opening up new opportunities to get beautiful images out of artifact-ridden seismic data, but it does take work to get there.